If you warm up your application to improve its performance, you know that the time it takes to complete application initialization can become a challenge.

The more warmup tasks and data your application needs, the longer the startup delay.

A long startup delay can become its own problem if your application restarts in production. Thankfully, you can use the approach in our Maximum IIS application pool availability with Application Initialization guide to perform the warmup without your users experiencing a delay after a recycle.

However, it’s still a good idea to speed up your application initialization. Aside from reducing the risk of production delays, another big reason is that it allows your application to enter service faster when scaling up your web farm.

In this post, we’ll go over several battle-tested techniques to reduce your warmup time (without giving up your warmup).

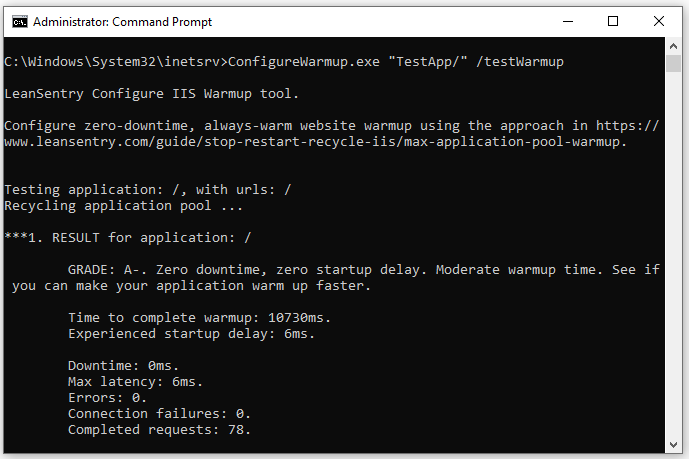

BONUS: We’ll also show you how you can use our ConfigureWarmup tool to test and optimize your startup delay.

Where is the application warmup code?

Just to be clear, when we say application warmup, we are normally referring to application initialization code that runs when your application first comes into service.

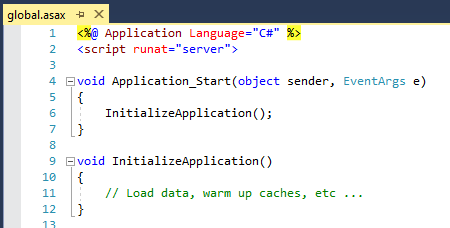

For most ASP.NET applications, this means code inside the Application_Start event handler, usually placed in Global.asax (or its Global.asax.cs code-behind).

IIS will call this code as part of application initialization, before the application processes its first request.

This code will also run if the application restarts, or if the application pool recycles.

If you are following the 100% warm, zero-startup-delay approach we detail in the Max availability guide, IIS will also start your applications immediately, even before any requests arrive, so they are warm right away.

So, our goal here is to make this warmup code execute in as little time as possible.

Strategy #1: Do application initialization early

This does not speed up your app initialization, but rather makes sure it happens as early as possible. In fact, we want the app started as soon as the web server starts up, so that by the time requests hit your website, the app has already completed all or most of its initialization.

(If it has not, it will finish initializing before IIS allows it to process that first request.)

You can see the full details on how to do this in the Max application pool availability guide.

Strategy #2: Speed up your warmup code

This is the main subject of this guide. Below are the code techniques we’ve used to successfully speed up application initialization times:

Parallelize application initialization tasks

I want to start by stating one fact: it is NOT a good idea to perform highly-parallel tasks in per-request code. This is a recipe for CPU and thread pool exhaustion, where N requests end up using up N x M threads and completely saturating the thread pool.

Avoid PLINQ/AsParallel in per-request code

We’ve seen an explosion of highly parallel per-request code after Microsoft released the Parallel Extensions (including PLINQ) in .NET 4.0. In this code, people would often execute various data retrieval tasks with as much concurrency as possible, using code that looked like this:

var results = tasks.AsParallel().WithDegreeOfParallelism(10).Select( t => ... );

These applications would then end up at 100% CPU usage as tens of requests began to execute code on hundreds of threads, or would hang completely under load as hundreds of concurrent threads got blocked by synchronous data retrieval tasks.

Every other time I would look at a high CPU hang in LeanSentry, it would be due to the concurrency-happy AsParallel() or ForAll() code.

So we strongly advise NOT to use highly parallel tasks, especially with synchronous tasks, in per-request application code.

That said, application warmup is not per-request code! In fact, at the time your app is warming up, all requests are actually blocked waiting for the application.

So, it’s in your best interest to use as much parallelism as possible to get all your data loaded concurrently and as soon as possible.

So, if you have multiple data retrieval tasks, do them all in parallel. We recommend the following pattern using the more recent async/await task syntax:

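// Helper method to queue our work to the thread pool for greater parallelism,
// including the synchronous (blocking or CPU-bound) part of each task.
// Taking a Func<Task> (not an already-started Task) means the work itself runs on the pool.
private static Task Parallel(Func<Task> work)
{
    return Task.Run(work);
}

// In our initialization code (inside LoadFirstData/LoadSecondData, use ConfigureAwait(false) on every await):
var tasks = new List<Task>();
tasks.Add(Parallel(LoadFirstData));
tasks.Add(Parallel(LoadSecondData));
// add more tasks

// Synchronously wait for the initialization to complete.
// Task.WhenAll(tasks) returns a plain Task (no .Result); GetAwaiter().GetResult()
// waits and rethrows the original exception instead of an AggregateException.
Task.WhenAll(tasks).GetAwaiter().GetResult();

// NOTE: If we were in async code, we would do this: await Task.WhenAll(tasks);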

Without getting into much detail on the async portion of this code, what we are after here is:

- Execute tasks in parallel (esp. if they contain CPU-bound code).

This is what the Parallel method above accomplishes by queueing the work to the thread pool with Task.Run. This way, we truly parallelize the execution of all tasks, whether they are CPU-bound or IO-bound.

- Minimize the thread pool usage for IO-bound, blocking tasks.

As long as they are proper asynchronous tasks, each will release the thread back to the thread pool while it waits for data.

Note that we are less concerned with thread pool usage here, because app initialization happens only once, unlike request execution.

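- Prevent serialization to the synchronization context by using ConfigureAwait(false). We don’t need each task to resume on the original thread context, so each await inside the load methods should explicitly tell the task not to complete back on the original context. If the waiting code runs on a synchronization context, this also helps avoid the classic deadlock where a continuation waits for the context that the blocked thread is holding. More on ConfigureAwait here.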
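To see why queueing each load to the thread pool matters, here is a small, self-contained sketch you can run outside IIS. LoadProductsBlocking and LoadPricesAsync are hypothetical stand-ins (one blocks its thread like a synchronous DB call, the other is truly asynchronous), and the 300 ms delays are made-up numbers for illustration:

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelWarmupDemo
{
    // Hypothetical stand-in for a legacy load that blocks its thread
    // (synchronous DB call or CPU-bound parsing) before returning a Task.
    public static Task<int> LoadProductsBlocking()
    {
        Thread.Sleep(300);
        return Task.FromResult(100);
    }

    // Hypothetical stand-in for a properly asynchronous load: the thread goes
    // back to the pool while the simulated I/O is pending.
    public static async Task<int> LoadPricesAsync()
    {
        await Task.Delay(300).ConfigureAwait(false);
        return 200;
    }

    // Queue each load to the thread pool, then wait once for all of them.
    public static (int Products, int Prices, long ElapsedMs) Warmup()
    {
        var stopwatch = Stopwatch.StartNew();

        Task<int> products = Task.Run(() => LoadProductsBlocking());
        Task<int> prices = Task.Run(() => LoadPricesAsync());

        // Blocking here is acceptable: no requests are served until warmup completes.
        Task.WhenAll(products, prices).GetAwaiter().GetResult();

        return (products.Result, prices.Result, stopwatch.ElapsedMilliseconds);
    }
}
```

Because each load is handed to Task.Run as a delegate, rather than invoked first and then passed along, the 300 ms of blocking in LoadProductsBlocking does not delay the start of LoadPricesAsync, so the total should land close to 300 ms rather than 600 ms.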

Load only the data you need

This is an obvious one, but often ignored in many applications.

If your application needs to cache a list of products, for example, it may end up loading A LOT more data into each Product object than the application actually uses from the cache.

This can significantly impact performance, especially if the Products table has columns that contain large data, e.g. a binary or a large nvarchar/string column, that your application does not use.

As a result, you pay several important penalties:

- Your application uses A LOT more memory than needed.

- You may experience significantly higher garbage collection overhead.

- Your application initialization takes a lot longer to complete, due to loading a lot more data over the network and the additional SQL query execution delays for loading the related entities.

Let’s take a look at two problematic scenarios.

In some cases, e.g. with the older LINQ to SQL, your “load products” query may be bringing back multiple other entities that have a referential relationship with the Product entity. This happens when you have configured LoadWith() eager loading relationships like this:

var dbContext = new MyDataContext(connection);
var options = new DataLoadOptions();
options.LoadWith<Product>(s => s.Prices);
options.LoadWith<Product>(s => s.Options);
// other LoadWith associations
dbContext.LoadOptions = options;

When you retrieve the Products from db, you are also going to be retrieving all these child entity relationships, and all their fields which you may or may not need.

Flipping the scenario, if you are using Entity Framework, you are not going to be eagerly fetching any related entities by default. Instead, unless you request them with Include(), accessing related entities triggers lazy loading (when it is enabled), which runs a separate database query each time a not-yet-loaded relationship is accessed.

This can cause significantly more queries and significantly longer execution times for your application warmup.

A good solution, in both cases, is to use an approach called Projection. With projection, you are in full control of both (a) the fields you retrieve for each Product and (b) any related entities you retrieve. You can retrieve the minimal set of data you intend to use in the cache, in a single query, giving you the best of both worlds.

For example:

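var products = context.Products
    .Select(p => new CachedProduct
    {
        Id = p.Id,
        Name = p.Name,
        // Project related entities into a small named type too (CachedOption has just Id and Price);
        // an anonymous type could not be assigned to a typed Options property
        Options = p.Options.Select(o => new CachedOption { Id = o.Id, Price = o.Price }).ToList()
    })
    .ToList();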

The main idea here is that we explicitly retrieve the fields from the Product entity and any related entities that we need in the cache, and nothing more. This creates a single join query that retrieves all the data in one shot, using all of the available indexes to speed up query execution.
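For the projection to compile, the cache types have to exist. Here is a minimal sketch of what they might look like. Product, ProductOption, CachedProduct and CachedOption are hypothetical shapes, and the query runs against an in-memory IQueryable so you can try it without a database. With an ORM such as EF Core, the same expression would be translated into SQL that selects only the projected columns:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical entity shapes: Product carries a large column we never cache.
public class ProductOption
{
    public int Id { get; set; }
    public decimal Price { get; set; }
    public string Notes { get; set; }        // not needed in the cache
}

public class Product
{
    public int Id { get; set; }
    public string Name { get; set; }
    public byte[] Image { get; set; }        // large column, not needed in the cache
    public List<ProductOption> Options { get; set; } = new List<ProductOption>();
}

// Cache-only shapes holding exactly the fields the application uses.
public class CachedOption
{
    public int Id { get; set; }
    public decimal Price { get; set; }
}

public class CachedProduct
{
    public int Id { get; set; }
    public string Name { get; set; }
    public List<CachedOption> Options { get; set; }
}

public static class ProjectionDemo
{
    // The projection only touches Id, Name and Options' Id/Price,
    // so Image and Notes are never read into the cache.
    public static List<CachedProduct> Project(IQueryable<Product> products) =>
        products
            .Select(p => new CachedProduct
            {
                Id = p.Id,
                Name = p.Name,
                Options = p.Options.Select(o => new CachedOption { Id = o.Id, Price = o.Price }).ToList()
            })
            .ToList();
}
```

Keeping separate cache types (rather than caching the entities themselves) also documents exactly which fields the cache depends on.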

Optimize your initialization code

This goes without saying, but the best way to speed something up is to measure its actual performance and then optimize the bottlenecks. Very often, the bottlenecks are going to be things you didn’t expect.

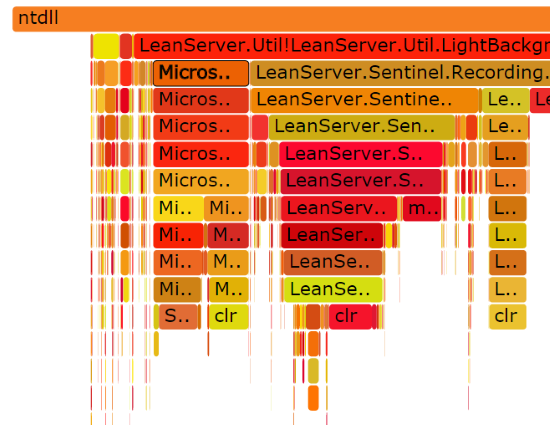

This is the central idea that LeanSentry is based on: diagnosing the actual bottlenecks as opposed to identifying all the possible things you can improve. For example, if your application is CPU bound during initialization, you’ll want to do a CPU profile during initialization and identify the code that you can optimize to speed it up.

Because you can simulate application initialization easily in a test environment (as opposed to diagnosing production issues, which you need to do in production), you can also use Visual Studio or any number of profiling tools to do the optimization. At the end of the day, you want to look at two things:

- Time taken by the different functions in your app warmup code.

- CPU usage of your warmup code.

If either is a problem, your profiling tool of choice should point out the places where you want to focus your optimization effort. Because this is different for each application, the rest is up to you!
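If you want a quick first measurement before reaching for a full profiler, you can wrap each warmup step in a simple timer. This sketch (WarmupTimer and Measure are hypothetical names) records the wall-clock time and the process-wide CPU time for each step. Process CPU time includes every thread in the process, so treat it as a rough signal when steps run in parallel:

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public static class WarmupTimer
{
    private static readonly object s_lock = new object();

    // One record per measured step: wall-clock ms and process CPU ms.
    public static readonly List<(string Step, long WallMs, long ProcessCpuMs)> Timings =
        new List<(string Step, long WallMs, long ProcessCpuMs)>();

    public static T Measure<T>(string step, Func<T> work)
    {
        using (Process process = Process.GetCurrentProcess())
        {
            TimeSpan cpuBefore = process.TotalProcessorTime;
            var stopwatch = Stopwatch.StartNew();
            try
            {
                return work();
            }
            finally
            {
                stopwatch.Stop();
                process.Refresh(); // discard any cached process information
                long cpuMs = (long)(process.TotalProcessorTime - cpuBefore).TotalMilliseconds;

                // Lock so that steps measured from parallel tasks don't corrupt the list.
                lock (s_lock)
                {
                    Timings.Add((step, stopwatch.ElapsedMilliseconds, cpuMs));
                }
                Trace.WriteLine($"Warmup step '{step}': {stopwatch.ElapsedMilliseconds} ms wall, {cpuMs} ms process CPU");
            }
        }
    }
}
```

For example, `var products = WarmupTimer.Measure("LoadProducts", () => LoadProducts());`, where LoadProducts is your own (hypothetical here) load method. A step with high wall time but low CPU time is usually waiting on I/O; a step with high CPU time is a candidate for a CPU profile.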

Strategy #3: Implement background cache load

This method involves moving the cache load from a startup-blocking task to a background task that runs right after the application starts, without blocking incoming traffic.

Pros

- Reduce startup time by allowing the application to start right away (without waiting for the cache load)

- Still make sure that the application has a warm-enough cache very soon after starting.

Cons

- The cache is not warm for the first requests, which can cause slower performance if a burst of requests arrives right away.

- Prevents you from always having a 100%-warm application when in service, if you use the application warmup approach from our Max application pool availability guide.

So, this approach has more value if you are NOT using the application warmup approach, and therefore experience a long startup delay when the app restarts in production.

Here is a simple example of how this works, loading our Products list into a cache in the background. We assume your application code then retrieves products by checking the cache first, and falling back to the DB on a cache miss.

global.asax

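protected void Application_Start(object sender, EventArgs e)
{
    // Start the cache load on the thread pool and return immediately,
    // so application initialization does not wait for it.
    Task.Run(() => LoadProductCache());
}

private async Task LoadProductCache()
{
    try
    {
        // MyDbContext: your application's DbContext subclass exposing Products
        using (var context = new MyDbContext())
        {
            var products = await context.Products.ToListAsync().ConfigureAwait(false);
            foreach (var product in products)
            {
                ProductHelper.AddToCache(product);
            }
        }
    }
    catch (Exception ex)
    {
        // Nobody awaits this task, so log failures here; requests keep falling back to the DB.
        System.Diagnostics.Trace.TraceError("Background product cache load failed: " + ex);
    }
}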

And in your cache helper:

public static class ProductHelper
{
    private static readonly ConcurrentDictionary<Guid, Product> s_productCache = new ConcurrentDictionary<Guid, Product>();

    // Add the product to the cache (called by the background load, so it must be accessible)
    public static void AddToCache(Product product)
    {
        s_productCache[product.Id] = product;
    }

    // Retrieve the product from the cache, optionally skipping the DB lookup on a cache miss
    public static Product GetProduct(Guid productId, bool lookUpCacheMiss = true)
    {
        Product product;
        if (s_productCache.TryGetValue(productId, out product))
        {
            return product;
        }

        // If not allowing cache misses, just return null
        if (!lookUpCacheMiss)
        {
            return null;
        }

        // Look up directly in DB on cache miss, we may still be loading the background cache
        // ...

        // Store the looked-up value in the cache
        if (product != null)
        {
            s_productCache[productId] = product;
        }
        return product;
    }
}

When using this approach, you are also likely going to want to implement the following enhancements:

- Store “misses” in the cache (e.g. a special “missing” Product instance), so that repeated requests for missing products don’t each go to the database. You can store the “missing” entry with a timestamp so that it expires, if you want to detect new items periodically, or you can store it forever if you use periodic background load (so that your cache automatically brings in new items).

- Perform the background load periodically, to update the data in the cache. This way, requests never need to fall back to the DB because of stale data.

- Stream-load the data to populate the cache sooner as the data comes in, as opposed to after all the data has loaded.
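The first two enhancements could look something like the following sketch. It is an illustration under assumptions rather than a drop-in replacement for ProductHelper: the Entry wrapper, the 5-minute miss TTL, and the DbLookup hook are all hypothetical. Here a cached miss is simply an entry whose Product is null, and the refresh loop reloads everything on an interval:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical cached type, standing in for the application's Product entity.
public sealed class Product
{
    public Guid Id { get; set; }
    public string Name { get; set; }
}

public static class ProductCache
{
    // One cache slot. Product == null marks a cached "miss"; LoadedAt lets misses expire.
    private sealed class Entry
    {
        public Product Product;
        public DateTime LoadedAt;
    }

    private static readonly ConcurrentDictionary<Guid, Entry> s_cache = new ConcurrentDictionary<Guid, Entry>();

    // How long a cached miss is trusted before checking the database again (assumed value).
    private static readonly TimeSpan s_missTtl = TimeSpan.FromMinutes(5);

    // Hypothetical database lookup hook; a real application would run its query here.
    public static Func<Guid, Product> DbLookup = id => null;

    public static void AddToCache(Product product) =>
        s_cache[product.Id] = new Entry { Product = product, LoadedAt = DateTime.UtcNow };

    public static Product GetProduct(Guid productId)
    {
        if (s_cache.TryGetValue(productId, out Entry entry))
        {
            bool isExpiredMiss = entry.Product == null && DateTime.UtcNow - entry.LoadedAt > s_missTtl;
            if (!isExpiredMiss)
            {
                return entry.Product; // a hit, or a miss we still trust (null)
            }
        }

        // Cache miss: go to the database and cache the result even when it is null,
        // so repeated requests for a missing product don't each hit the database.
        Product product = DbLookup(productId);
        s_cache[productId] = new Entry { Product = product, LoadedAt = DateTime.UtcNow };
        return product;
    }

    // Periodic background load: reloading everything on an interval refreshes stale
    // entries and replaces cached misses for products that now exist.
    public static async Task RefreshLoopAsync(Func<Task<Product[]>> loadAll, TimeSpan interval, CancellationToken token)
    {
        while (!token.IsCancellationRequested)
        {
            foreach (Product product in await loadAll().ConfigureAwait(false))
            {
                AddToCache(product);
            }

            try
            {
                await Task.Delay(interval, token).ConfigureAwait(false);
            }
            catch (OperationCanceledException)
            {
                break;
            }
        }
    }
}
```

You could start the loop from Application_Start with Task.Run, passing your own full product query as loadAll. In production you would also catch and log exceptions from loadAll inside the loop, so that one failed reload doesn’t end the refresh loop.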

Stream-loading the background cache

If your data load query is streaming, you can potentially speed up the background cache load by inserting cache records as soon as they come in, instead of after all have loaded.

Here is the modified version of our cache load that inserts incrementally:

// A statement lambda inside Select() can't be translated to SQL, so use ForEachAsync,
// which streams the rows and caches each product as soon as it is read
await context.Products.ForEachAsync(p => ProductHelper.AddToCache(p));

You can combine this easily with the Projection technique from earlier to cache only an efficient subset of data.

Strategy #4: Cache shared data in a distributed cache

If you have many webservers, each of them will load caches separately whenever the application (re)starts on that server.

If you are deploying many instances concurrently, this could lead to an overloaded SQL server or backend database.

To combat this, you can store the loaded cache in an intermediate cache like Redis. You can use Redis locking to make sure only 1 instance loads the cache at any given time, or you can just allow a race between nodes to overwrite each other’s data. This could bank on the fact that

This way, one application instance loads the cache from the DB (expensive), and the others just retrieve it from the Redis instance (cheap).
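Here is a minimal sketch of the locking variant, written against a hypothetical ISharedCache interface so it runs without a Redis server. With StackExchange.Redis, TryLock/Unlock could map to IDatabase.LockTake/LockRelease and Get/Set to StringGet/StringSet. The in-memory stand-in ignores lock expiry, and the 2-minute lock expiry and 200 ms polling interval are assumed values:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical abstraction over a shared cache such as Redis.
public interface ISharedCache
{
    string Get(string key);
    void Set(string key, string value);
    bool TryLock(string key, string owner, TimeSpan expiry);
    void Unlock(string key, string owner);
}

// In-memory stand-in so the sketch runs without a server (ignores expiry and owner checks).
public sealed class InMemorySharedCache : ISharedCache
{
    private readonly ConcurrentDictionary<string, string> _values = new ConcurrentDictionary<string, string>();

    public string Get(string key) => _values.TryGetValue(key, out var value) ? value : null;
    public void Set(string key, string value) => _values[key] = value;
    public bool TryLock(string key, string owner, TimeSpan expiry) => _values.TryAdd("lock:" + key, owner);
    public void Unlock(string key, string owner) => _values.TryRemove("lock:" + key, out _);
}

public static class WarmupDataLoader
{
    // One instance loads from the database and publishes the serialized data;
    // the others wait for it instead of hitting the database.
    public static string GetOrLoad(ISharedCache cache, string key, Func<string> loadFromDb, TimeSpan timeout)
    {
        string owner = Guid.NewGuid().ToString();
        DateTime deadline = DateTime.UtcNow + timeout;

        while (true)
        {
            string cached = cache.Get(key);
            if (cached != null) return cached;                    // cheap path

            if (cache.TryLock(key, owner, TimeSpan.FromMinutes(2)))
            {
                try
                {
                    cached = cache.Get(key);                      // published while we waited?
                    if (cached != null) return cached;

                    string loaded = loadFromDb();                 // expensive path
                    cache.Set(key, loaded);
                    return loaded;
                }
                finally
                {
                    cache.Unlock(key, owner);
                }
            }

            // Another instance holds the lock; don't wait forever if it dies.
            if (DateTime.UtcNow > deadline) return loadFromDb();
            Thread.Sleep(200);                                    // blocking is fine during warmup
        }
    }
}
```

The second check after taking the lock covers the case where another instance published the data between our first read and our lock. Falling back to the database after the timeout is a design choice: it costs an extra DB load, but keeps one crashed instance from stalling everyone else’s warmup.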

Strategy #5: Cache warmup data on disk

A version of the previous approach, but this time it’s local to the server. You can store the serialized cache dataset to a file on disk after you load it.

Then, if your app restarts and you have a recent cache snapshot on disk, you can load it instead of loading from the DB. Your background load process can then refresh the data from the DB.
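A minimal sketch of the disk snapshot, assuming System.Text.Json for serialization (built into .NET Core 3.0+ and available as a NuGet package for .NET Framework). DiskSnapshot, CachedProduct, and the maxAge threshold are hypothetical; a real application would still start its background DB refresh after loading from disk:

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

// Hypothetical cache-only shape.
public sealed class CachedProduct
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class DiskSnapshot
{
    // Use the local snapshot if it is recent enough; otherwise load from the
    // database and write a fresh snapshot for the next restart.
    public static (List<CachedProduct> Products, bool FromDisk) Load(
        string path, TimeSpan maxAge, Func<List<CachedProduct>> loadFromDb)
    {
        if (File.Exists(path) && DateTime.UtcNow - File.GetLastWriteTimeUtc(path) < maxAge)
        {
            try
            {
                var snapshot = JsonSerializer.Deserialize<List<CachedProduct>>(File.ReadAllText(path));
                if (snapshot != null) return (snapshot, true);
            }
            catch (JsonException)
            {
                // Corrupt snapshot: fall through to the database.
            }
        }

        var products = loadFromDb();
        Save(path, products);
        return (products, false);
    }

    // Write to a temporary file first, then swap it in.
    public static void Save(string path, List<CachedProduct> products)
    {
        string tmp = path + ".tmp";
        File.WriteAllText(tmp, JsonSerializer.Serialize(products));

        if (File.Exists(path)) File.Replace(tmp, path, null); // null: no backup file
        else File.Move(tmp, path);
    }
}
```

Writing to a temporary file first means a crash in the middle of serialization leaves the previous snapshot intact instead of a truncated file that would fail to load on the next restart.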

Conclusion

We strongly recommend the application warmup approach detailed in the Max application pool availability guide to make your IIS website always warm, with a zero perceived “startup delay”.

However, it still pays to optimize your startup delay, because it allows you to reduce the time for your webserver instances to come online/enter service. This helps you when scaling up your web farm or cloud scaleset.

If your application initialization process takes a while, you can use the techniques in this guide to speed it up:

- Do application init early (as opposed to on first request).

- Parallelize initialization tasks (this is OK here, unlike in per-request code).

- Load only the data you need (e.g. using EF or LINQ projection).

- Implement background cache load.

- Save/load cache to/from Redis or local filesystem.

- Profile and tune your startup code bottlenecks.

After applying the architecture techniques, you can test your warmup time with our ConfigureWarmup tool.

We hope this advice helps you reduce your startup time. If you have other techniques you’ve successfully used in your application, please share them in the comments!

Final thought: if you are spending a lot of time troubleshooting production performance issues: hangs, CPU overloads, memory leaks and the like, deploy LeanSentry to diagnose those issues down to the code quickly.