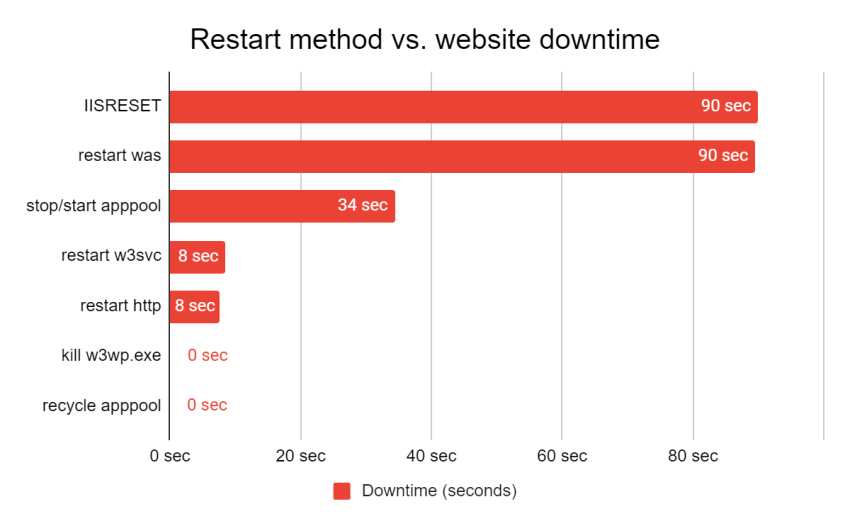

When we wrote the Reset, restart and recycle IIS websites guide, we wanted to illustrate the real-world impact of common ways to restart IIS services.

You can learn all about the theory till your face turns blue, but when the time comes to actually restart your apps in production, you will be sure to feel those real impacts.

This post provides the details of how we did the test, and explains the results.

For each restart command, we’ll also look at what happens and explain WHY the impact of restarts and recycling is what it is.

Here are the commands we tested:

- IISRESET

- Restarting the Windows Process Activation Service (WPAS or WAS)

- Restarting the World Wide Web Publishing Service (W3SVC)

- Stopping and starting the IIS application pool

- Killing the IIS worker process

- Recycling the IIS application pool

Let’s go!

Test setup

When you see performance tests, they are usually done in pure isolation, to help you focus on the impact of a specific thing you are testing.

However, in production, that is not the case. Everything affects everything else, as you will see below.

The setup

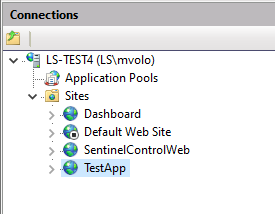

So, for our test setup, we mirrored a real production IIS web server that is running 3 sites, including the simple site we aimed to restart and 2 other production websites. This setup is similar to many real IIS websites we see with LeanSentry customers.

- TestApp: a simple website that we’ll be restarting.

- Dashboard: the LeanSentry dashboard website.

- ControlEndpoint: the high-traffic LeanSentry data endpoint that accepts performance data from our customers.

Goals

Our goal here will be to restart TestApp, the application with supposed performance issues, with the lowest downtime … while also trying to maintain availability for the other sites.

The traffic pattern

During the test, we’ll have a continuous traffic pattern that includes:

- A fast, high traffic page with 50 requests/second.

- A slower page that takes 10 seconds to finish, with 2 active requests at all times.

This is meant to simulate the typical mix of fast and slow requests that a production site may experience.
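As a rough illustration of that mix, here is a minimal Python sketch that builds a request schedule with the same shape. It is not the tool we used for the test: the `build_schedule` name, the open-loop pacing, and the idea of restarting each slow request the moment the previous one finishes are all assumptions made for the example.

```python
from typing import List, Tuple


def build_schedule(duration_s: int,
                   fast_rps: int = 50,
                   slow_concurrency: int = 2,
                   slow_duration_s: int = 10) -> List[Tuple[int, str]]:
    """Return (start_time_ms, kind) pairs for a simple open-loop schedule.

    Assumes fast_rps divides 1000 evenly so the pacing stays in whole
    milliseconds (50 requests/second gives one request every 20 ms).
    """
    events: List[Tuple[int, str]] = []

    # Fast page: one request every 1000 / fast_rps milliseconds.
    interval_ms = 1000 // fast_rps
    for t in range(0, duration_s * 1000, interval_ms):
        events.append((t, "fast"))

    # Slow page: keep `slow_concurrency` requests active by starting a new
    # batch every time the previous batch finishes.
    for t in range(0, duration_s * 1000, slow_duration_s * 1000):
        for _ in range(slow_concurrency):
            events.append((t, "slow"))

    return sorted(events)


schedule = build_schedule(duration_s=20)
fast_count = sum(1 for _, kind in schedule if kind == "fast")
slow_count = sum(1 for _, kind in schedule if kind == "slow")
print(fast_count, slow_count)
```

A real load generator would also have to send the requests and record each outcome. The schedule only captures the shape of the traffic.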

The test

We tried to restart the TestApp website to “fix it”, using all the different methods we have available.

Then, we measured the impact of each method on availability and performance of the site across the restart.

The metrics

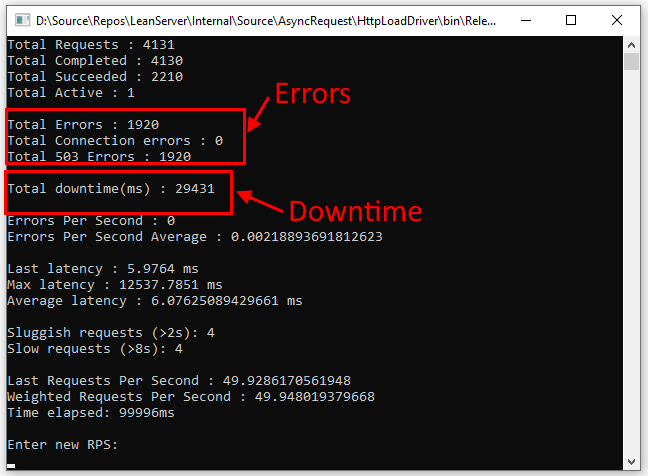

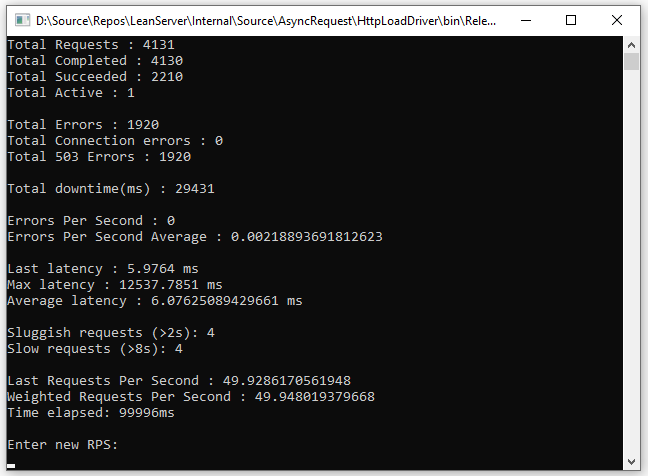

Here are the key metrics we tracked:

- Downtime: the total time the website is down, i.e. all requests are failing or clients cannot connect. We ignore brief blips and only count continuous downtime of 1 second or more.

- Errors: the total number of requests that failed across the restart, either with a connection error or with 503 Service Unavailable.

Our ideal goal of course was to restart our website with 0 downtime and 0 failed requests.
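To make those definitions concrete, here is a small Python sketch of how the two metrics could be computed from a list of request samples. The sample format and the outcome labels (`"ok"`, `"503"`, `"conn_error"`) are hypothetical, and an outage is measured here from the first failed request to the next successful one, which is one reasonable reading of the definition above rather than our exact tooling.

```python
from typing import Dict, List, Tuple

# (timestamp in seconds, outcome); outcome labels are hypothetical.
Sample = Tuple[float, str]


def summarize(samples: List[Sample], min_outage_s: float = 1.0) -> Dict[str, float]:
    """Count errors and add up continuous outages of min_outage_s or longer."""
    samples = sorted(samples)
    errors_503 = sum(1 for _, outcome in samples if outcome == "503")
    conn_errors = sum(1 for _, outcome in samples if outcome == "conn_error")

    downtime = 0.0
    outage_start = None  # timestamp of the first failure in the current run

    for ts, outcome in samples:
        if outcome != "ok":
            if outage_start is None:
                outage_start = ts
        elif outage_start is not None:
            # A success ends the outage; short blips are ignored.
            length = ts - outage_start
            if length >= min_outage_s:
                downtime += length
            outage_start = None

    # If the log ends while still failing, count up to the last sample.
    if outage_start is not None:
        length = samples[-1][0] - outage_start
        if length >= min_outage_s:
            downtime += length

    return {
        "downtime_s": downtime,
        "errors_503": errors_503,
        "connection_errors": conn_errors,
    }


log = [
    (0.0, "ok"), (1.0, "503"), (1.5, "conn_error"), (3.0, "503"),
    (3.5, "ok"), (4.0, "503"), (4.2, "ok"),
]
print(summarize(log))
```

In this made-up log, the failing stretch from 1.0s to 3.5s counts as downtime, while the single failure at 4.0s is a blip that only shows up in the error count.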

Let’s get into the results!

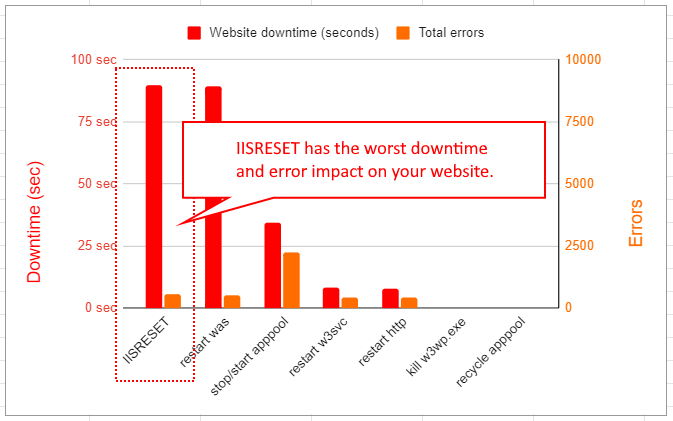

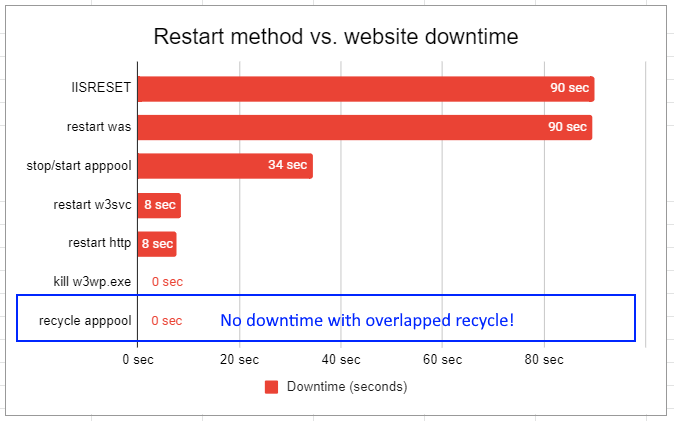

The results

We’ll look at each restart method we tried, and the result.

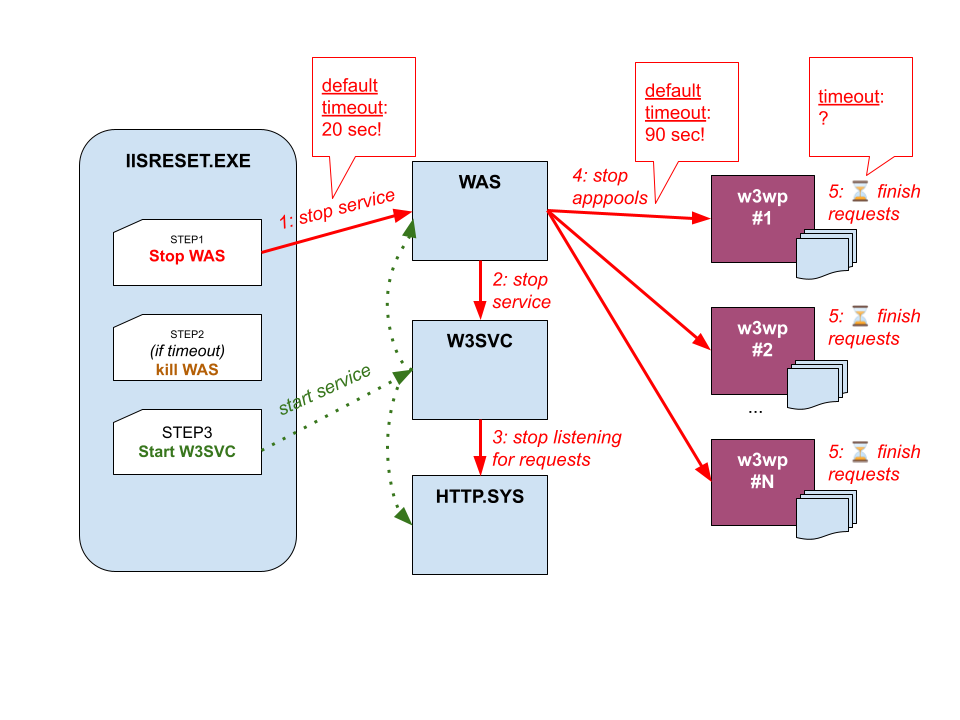

IISRESET

Oh boy. If you read our Reset, restart and recycle IIS websites guide and the detailed post on Dangers of IISRESET ... you already know this does not turn out well.

First, we could not use the default IISRESET command, because it has a 20 second timeout and would inevitably fail with “Access Denied” errors, despite being run with the Run as Administrator option.

What’s worse, it would leave the WAS/W3SVC services completely down on the server, resulting in permanent downtime.

(You can read the details on these issues and other problems caused by IISRESET in our Dangers of using IISRESET to restart IIS services post.)

So, we had to come up with a modified command that allowed us to complete the test. You can read all about this in Dangers of IISRESET, but here is the quick and dirty version:

iisreset /timeout:300

What happened

IISRESET stops W3SVC, which aborts any existing request connections … and returns connection errors for any new requests because HTTP.SYS stops listening on the website binding endpoints.

Then IISRESET stops WAS, which tries to stop all IIS application pools. This returns 503s for any queued requests and waits for each active worker process to stop, up to the shutdown time limit. The default time limit is 90 seconds, and because our other production sites had some very long-running requests, the stop took the full 90 seconds.

At the end, the worker processes that were still running were killed, and WAS completed the stop.

Results

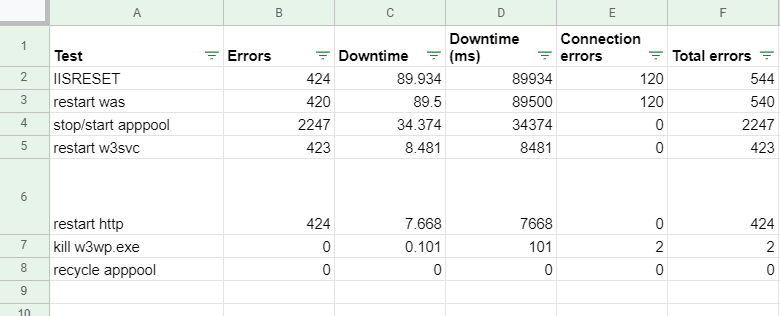

| Metric | Value |
| --- | --- |
| Downtime | 89.9 seconds |
| 503 errors | 424 |
| Connection errors | 120 |

That’s a lot of downtime (for all websites) to justify for just restarting our TestApp site. In the final results, this was the worst option, but you probably already knew that.

Verdict: DON’T USE.

Restarting WAS

This was not much better, simply because restarting WAS is effectively what IISRESET does!

Here is the command:

net stop was /yes

net start w3svc

What happened

Similar to IISRESET.

Results

| Metric | Value |
| --- | --- |
| Downtime | 89.5 seconds |
| 503 errors | 420 |
| Connection errors | 120 |

Same as before, this option caused prolonged downtime for all websites. We consider restarting WAS as bad as IISRESET. The only difference is that it is a more direct way to stop and start WAS, and very slightly less prone to failure.

Verdict: DON’T USE.

Stop/start application pool

Now we are getting a bit smarter. Shutting down IIS services stops unrelated sites (and increases total downtime for everyone because we wait for other pools to stop).

Instead, this time we’ll shut down just the pool we need to shut down:

appcmd stop apppool TestApp /wait:true /timeout:180000

appcmd start apppool TestApp

What happened

Similar to stopping WAS, we waited for the TestApp application pool to stop. The worker process stopped before the shutdown time limit so the stop succeeded in about 30 seconds.

Unlike stopping WAS, this DID NOT affect the other sites and application pools, and they continued to operate normally.

Results

| Metric | Value |
| --- | --- |
| Downtime | 34.3 seconds |
| 503 errors | 2247 |
| Connection errors | 0 |

Let’s explain this:

- First, we have a lot more 503 errors, and no connection errors. This is because we didn’t stop W3SVC, so HTTP.SYS is still listening for and receiving requests to our website. However, because the pool is stopping, all these requests are getting rejected with 503 Service Unavailable.

- Second, we still have significant downtime, but a much lower 34 seconds compared to 90 seconds we had before. The reason for the improvement is that we are only waiting for the TestApp worker process to stop, and not waiting for the others.
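The difference between the two stop times can be sketched with a tiny model: each worker process is waited on until it drains or hits the shutdown time limit, whichever comes first. The Python below is only an illustration. It assumes the pools are stopped in parallel, and the 600 second drain times for the other two sites are hypothetical stand-ins for "very long running requests". Only the roughly 30 second drain for TestApp and the 90 second limit come from our test.

```python
from typing import Iterable


def stop_wait_s(drain_times_s: Iterable[float], shutdown_limit_s: float = 90.0) -> float:
    """Time spent waiting for a set of pools to stop.

    Each pool is waited on until it drains or reaches the shutdown time
    limit. Pools are assumed to stop in parallel, so the slowest one
    determines the total.
    """
    return max((min(d, shutdown_limit_s) for d in drain_times_s), default=0.0)


# Seconds each worker process needs to finish its active requests.
# Dashboard and ControlEndpoint values are hypothetical.
drain = {"TestApp": 30.0, "Dashboard": 600.0, "ControlEndpoint": 600.0}

all_pools = stop_wait_s(drain.values())     # stopping WAS: wait on every pool
one_pool = stop_wait_s([drain["TestApp"]])  # stopping only the TestApp pool

print(all_pools, one_pool)  # 90.0 30.0
```

Under these assumptions, stopping everything is capped by the shutdown time limit, while stopping one pool only costs as long as that pool's own requests take to finish.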

Still, it’s a painful result that we’d like to avoid in production. So, let’s keep trying to do better.

Verdict: DON’T USE.

Kill IIS worker process (w3wp.exe)

Frustrated with the results, we get impatient and kill the worker process for our next test:

killwp.bat TestApp

What happened

This terminated the worker process without waiting for any existing requests to finish. HTTP.SYS aborted any requests in flight with connection resets.

However, because our application has no startup delay, a new IIS worker process started right away and began taking new requests.

Results

| Metric | Value |
| --- | --- |
| Downtime | 0.1 seconds |
| 503 errors | 0 |
| Connection errors | 2 |

The results are surprisingly good! We had almost zero downtime, and just a few errors for the long running requests that got aborted halfway through.

It’s important to know two caveats:

- Process termination. Some applications do not respond well to worker process termination, due to file corruption or external resource locking that may prevent the new worker process from functioning. This will also happen if your process crashed, so you’ll definitely want to correct this for your application.

- Startup delay. Our test worker process does not do any application initialization, so it starts right away. For any production application that has a significant startup delay, the new worker process started by WAS would experience this delay. This could result in downtime.

So, we do not generally recommend killing your worker process for these reasons. It turns out we can do A LOT better still.

Verdict: OK, BUT WITH CAVEATS.

Recycle IIS application pool

IIS provides a better way to restart web applications: the IIS application pool overlapped recycle.

The overlapped recycle gives us the ability to create a fresh worker process to run our apps, and let the old process finish out any existing requests in the background.

Most importantly, IIS makes sure that the overlapped recycle does not lose any requests. So, it offers a zero downtime process for restarting IIS applications.

Let’s do it:

appcmd recycle apppool TestApp

What happened

A new worker process was started for TestApp right away, taking on new requests.

The old worker process finished out the active requests (remember that we had some 10s-long requests in the mix). Then, it gracefully shut down.
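The handoff can be pictured with a very simplified Python model. It is a sketch of the idea, not of how WAS implements it: it assumes the new worker process is ready the instant the recycle is issued (true for our test app, which has no startup delay), and it ignores the shutdown time limit on the old process. The function name and the request tuples are made up for the example.

```python
from typing import List, Tuple


def overlapped_recycle(requests: List[Tuple[float, float]],
                       recycle_at: float) -> Tuple[List[str], float]:
    """Assign each (start_s, duration_s) request to the old or new process.

    Requests that started before the recycle stay on the old process and
    run to completion. Everything that arrives afterwards goes to the new
    process. Returns the assignments and the time the old process can exit.
    """
    assignments: List[str] = []
    old_exit = recycle_at
    for start, duration in requests:
        if start < recycle_at:
            assignments.append("old")
            # The old process must stay alive until this request finishes.
            old_exit = max(old_exit, start + duration)
        else:
            assignments.append("new")
    return assignments, old_exit


# Two 10s requests are in flight when the pool is recycled at t=8s.
requests = [(0.0, 10.0), (5.0, 10.0), (8.5, 0.02), (12.0, 10.0)]
assignments, old_exit = overlapped_recycle(requests, recycle_at=8.0)
print(assignments, old_exit)  # ['old', 'old', 'new', 'new'] 15.0
```

Every request ends up with a process to serve it, which is why no request fails in this model: the old process simply lingers until its last long request completes.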

Results

| Metric | Value |
| --- | --- |
| Downtime | 0 seconds |
| 503 errors | 0 |
| Connection errors | 0 |

You can’t do better than that!

Recycling the application pool is almost always the right choice: in our test, it had no visible impact on the application in question OR any other website on the server.

Costs of application pool recycling

Recycling IIS application pools can have costs, depending on your application. Be sure to check out hidden costs of application pool recycling in our IIS restart guide.

Verdict: YOUR BEST OPTION!

Other tests we did

We also conducted several other restart tests, results for which are shown in the earlier graph. We didn’t include a detailed workup of these because we don’t consider them viable options for restarting IIS sites most of the time, and they don’t add much to our discussion.

Here they are for completeness:

- Restart HTTP. There is really no point doing this. The HTTP.SYS kernel driver really never requires stopping, and in our testing it occasionally failed to stop, leaving the server in a broken state until the next server reboot. When it did restart successfully, it amounted to restarting WAS plus some other system services that depend on HTTP.

- Restart W3SVC. This had a relatively low downtime of ~8 seconds, because it did not stop WAS or the application pools. Instead, it stopped and restarted the website bindings in HTTP, resulting in some 503 and connection errors.

- Reboot server VM. We didn’t even do this one.

NOTE: After W3SVC restarted, it triggered a recycle of all application pools. Because recycling is zero downtime, this did not add any downtime to the test. Still, the bottom line is: don’t restart W3SVC. Stick to recycling the right pool instead.

Conclusion

The bottom line is:

- IISRESET and restarting WAS are the worst ways to restart IIS websites.

- The overlapped application pool recycle is the clear winner for restarting IIS applications. You can read the full recommendations in our IIS restart guide.
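For a side-by-side view, the short Python snippet below ranks the five methods using the numbers measured in the sections above. The ranking rule (downtime first, then total failed requests) is just one simple way to order them, chosen for this illustration.

```python
# (method, downtime in seconds, 503 errors, connection errors),
# copied from the results tables in this post.
results = [
    ("IISRESET", 89.9, 424, 120),
    ("Restart WAS", 89.5, 420, 120),
    ("Stop/start app pool", 34.3, 2247, 0),
    ("Kill worker process", 0.1, 0, 2),
    ("Recycle app pool", 0.0, 0, 0),
]

# Lowest downtime wins; total failed requests breaks ties.
ranked = sorted(results, key=lambda r: (r[1], r[2] + r[3]))

for name, downtime, e503, conn in ranked:
    print(f"{name:<22}{downtime:>6.1f}s  {e503 + conn:>5} failed requests")
```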

That said, some production websites that experience poor cold start performance, or long startup delays, will experience difficulty in a production recycle.

To make sure your website can handle production restarts, with zero startup delay, and maintain always-warm performance, see our Maximum IIS application pool availability guide.