This rule measures the performance of the CLR thread pool, which supplies the threads for most work inside an ASP.NET/.NET application.

The rule tracks two things: how quickly the CLR thread pool can provide threads for new tasks (the thread pool delay metric), and the number of active CLR worker and IO threads relative to the current thread pool limits and the pool's ability to grow.
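
To make these two signals concrete, here is a minimal Python sketch that uses `concurrent.futures.ThreadPoolExecutor` as a stand-in for the CLR thread pool. It records each task's queue delay (the time from submission until a thread starts running it) and the number of active workers relative to the pool limit. The `InstrumentedPool` class is hypothetical and is not how this rule collects its data; also note that, unlike the CLR thread pool, `ThreadPoolExecutor` never grows past `max_workers`.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class InstrumentedPool:
    """Hypothetical wrapper that records two pool-health signals:
    queue delay (submit -> start) and active workers vs. the pool limit."""

    def __init__(self, max_workers):
        self.max_workers = max_workers
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._lock = threading.Lock()
        self.active = 0       # tasks currently running
        self.peak_active = 0  # highest value of `active` observed
        self.delays = []      # seconds each task waited before a thread picked it up

    def submit(self, fn, *args):
        submitted_at = time.perf_counter()

        def wrapper():
            started_at = time.perf_counter()
            with self._lock:
                self.delays.append(started_at - submitted_at)
                self.active += 1
                self.peak_active = max(self.peak_active, self.active)
            try:
                return fn(*args)
            finally:
                with self._lock:
                    self.active -= 1

        return self._pool.submit(wrapper)

    def utilization(self):
        """Fraction of the pool limit currently in use (1.0 = saturated)."""
        with self._lock:
            return self.active / self.max_workers

    def shutdown(self):
        self._pool.shutdown(wait=True)


if __name__ == "__main__":
    pool = InstrumentedPool(max_workers=4)
    # 12 blocking tasks of 0.2 s on 4 threads: later tasks must wait in the queue.
    futures = [pool.submit(time.sleep, 0.2) for _ in range(12)]
    for f in futures:
        f.result()
    pool.shutdown()
    print(f"peak active threads: {pool.peak_active}/{pool.max_workers}")
    print(f"max queue delay: {max(pool.delays):.2f} s")
```

Once every thread is busy, the queue delay climbs even though each individual task is no slower; that is the kind of pressure the delay metric is meant to surface.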

Why is this important

If the thread pool has too many active threads, it may have trouble providing the additional threads needed to process new requests and other application tasks. This can be caused by slow thread pool growth, high CPU utilization, GC activity, or exhausting the maximum number of threads allowed. When this happens, your application can experience severe performance degradation and, in some cases, a complete or partial hang.

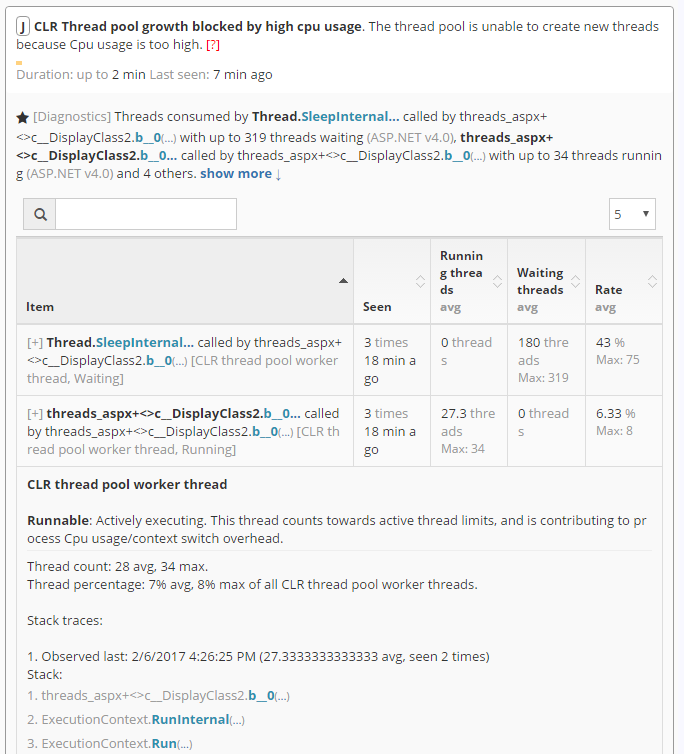

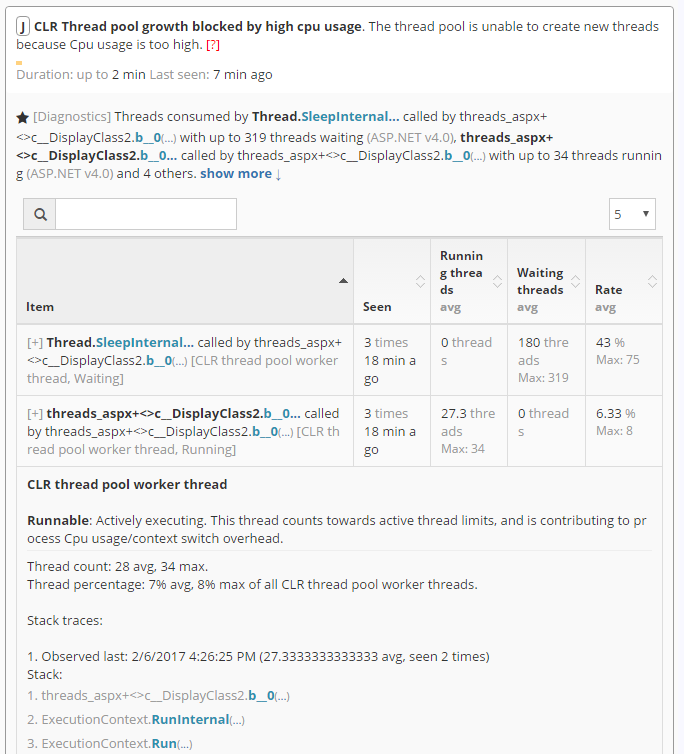

Diagnostics

This rule attempts to automatically determine why the thread pool may be experiencing delays, starvation, and/or exhaustion, including the causes of thread blocking and insufficient thread pool growth.

The diagnostics also identify the code that is causing threads to become tied up, leading to thread pool exhaustion.

Review the stacks identified by the diagnostics for additional tags that explain what kind of activity is taking place and how the code may be affecting the thread pool.

Improving this score

Review the identified causes to see the specific reasons why thread pool performance was degraded. Follow the recommendations for each cause to improve thread pool performance.

In most cases, thread pool performance is degraded by code that uses or blocks too many threads. Review the stack traces that blocked the most threads, and work to reduce thread blocking in each location.
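
One common way to reduce blocking is to replace synchronous waits with asynchronous ones, so the thread goes back to the pool while the operation is pending (in .NET, `await` instead of `.Result`, `.Wait()`, or `Thread.Sleep`). The Python sketch below compares the two styles under assumed conditions: a simulated 0.1 s I/O wait, a blocking handler running on a 4-thread `ThreadPoolExecutor`, and an `asyncio` handler running on a single event-loop thread. The handler and function names are hypothetical.

```python
import asyncio
import threading
import time
from concurrent.futures import ThreadPoolExecutor

IO_WAIT = 0.1  # simulated latency of a downstream call (assumption)


def blocking_handler():
    time.sleep(IO_WAIT)  # holds its pool thread for the entire wait
    return threading.get_ident()


async def async_handler():
    await asyncio.sleep(IO_WAIT)  # yields the thread back while waiting
    return threading.get_ident()


def run_blocking(n_requests, workers=4):
    """Run n_requests blocking handlers on a fixed pool; return (seconds, threads used)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        thread_ids = set(pool.map(lambda _: blocking_handler(), range(n_requests)))
    return time.perf_counter() - start, len(thread_ids)


async def _run_all_async(n_requests):
    return await asyncio.gather(*(async_handler() for _ in range(n_requests)))


def run_async(n_requests):
    """Run n_requests async handlers on one event loop; return (seconds, threads used)."""
    start = time.perf_counter()
    thread_ids = set(asyncio.run(_run_all_async(n_requests)))
    return time.perf_counter() - start, len(thread_ids)


if __name__ == "__main__":
    for name, (secs, threads) in [("blocking", run_blocking(20)), ("async", run_async(20))]:
        print(f"{name:>8}: {secs:.2f} s using {threads} thread(s)")
```

With 20 requests, the blocking version needs roughly five rounds of 0.1 s because only four threads are available, while the async version finishes in about one round on a single thread.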

Pay attention to the tags on each unique stack, e.g. the distinction between blocking/waiting stacks and executing stacks. For blocking stacks, you will need to find ways to reduce blocking time. For executing stacks that consume the most threads, you will likely need to reduce concurrency by implementing batching/queueing, or optimize the code to reduce CPU costs along the hot path.
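
For executing stacks, one way to reduce concurrency is to queue work and process it in batches on a small, fixed number of threads, instead of letting every request hold its own thread for the same operation. The Python sketch below is a minimal version of that idea under stated assumptions: `BatchingWriter` and its `flush_batch` callback are hypothetical, a single background thread does all the flushing, and callers return as soon as their item is queued.

```python
import queue
import threading

_STOP = object()  # sentinel that tells the background thread to exit


class BatchingWriter:
    """Hypothetical example: callers enqueue items and return immediately,
    and one background thread hands them to flush_batch in groups."""

    def __init__(self, flush_batch, max_batch=50):
        self._flush_batch = flush_batch
        self._max_batch = max_batch
        self._queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, item):
        self._queue.put(item)  # the caller does not hold a thread for the write

    def close(self):
        """Flush everything already queued, then stop the background thread."""
        self._queue.put(_STOP)
        self._worker.join()

    def _run(self):
        while True:
            item = self._queue.get()  # wait for at least one item
            stop = item is _STOP
            batch = [] if stop else [item]
            # Drain whatever else is already queued, up to max_batch items.
            while not stop and len(batch) < self._max_batch:
                try:
                    item = self._queue.get_nowait()
                except queue.Empty:
                    break
                if item is _STOP:
                    stop = True
                else:
                    batch.append(item)
            if batch:
                self._flush_batch(batch)
            if stop:
                return


if __name__ == "__main__":
    sizes = []
    writer = BatchingWriter(lambda batch: sizes.append(len(batch)), max_batch=50)
    for i in range(500):
        writer.submit(i)
    writer.close()
    print(f"{sum(sizes)} items written in {len(sizes)} flushes")
```

The design keeps the number of threads doing this work at one regardless of request volume, and `max_batch` caps how much a single flush does. A production version would also need error handling around `flush_batch` and, likely, a bound on the queue length.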

Expert guidance

Additional assistance with implementing more scalable code may be available for Business plan customers; please contact support.
